Multi-modal Emotion Recognition

Recognition of human emotions through multiple modalities

Overview

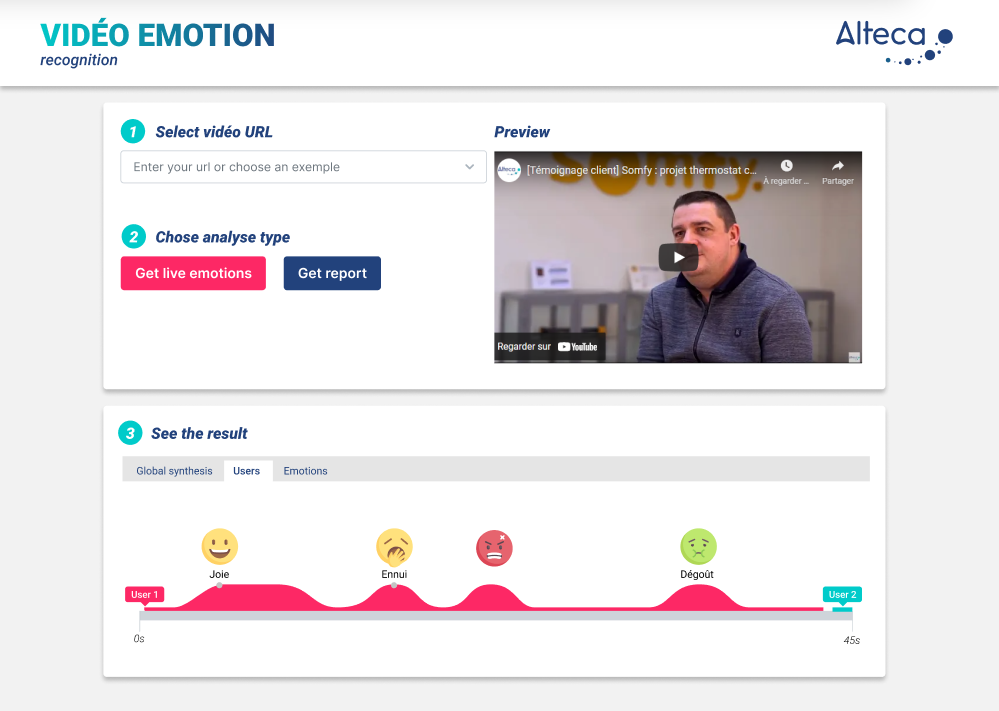

In this project, we are developing a multi-modal emotion recognition system that goes beyond single-source methods by combining several kinds of input: facial expressions, voice tonality, and text. The goal is a comprehensive and accurate model for understanding and interpreting human emotions across different contexts.

Multi-modal emotion recognition UI

Features

- Facial Expression Analysis:
  - Detect and analyze facial expressions using computer vision techniques.
  - Recognize key facial landmarks and the expressions associated with different emotions.

- Audio Feature Analysis:
  - Use audio processing to capture and analyze tonal variation in speech.
  - Identify patterns in pitch, intensity, and tempo to infer emotional states.

- Textual Data Analysis:
  - Process extracted text to detect emotional cues.

- Multi-Modal Fusion:
  - Integrate information from facial expressions, audio features, and text to build a holistic picture of the user’s emotional state.
  - Develop fusion strategies that combine the modalities effectively, improving accuracy over any single modality.

- Real-Time Emotion Recognition:
  - Analyze emotions in real time as they unfold.
  - Provide immediate feedback based on the recognized emotions.

- Emotion Trend Analysis:
  - Reveal temporal patterns in emotions to support trend analysis over time.
  - Identify recurring emotional states and potential triggers.
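As a rough illustration of the pitch and intensity cues mentioned in the audio analysis above, the sketch below computes two standard frame-level features from raw samples: root-mean-square (RMS) energy as an intensity proxy, and an autocorrelation-based estimate of the fundamental frequency (pitch). It is a minimal pure-Python sketch, not the project's implementation; it assumes mono floating-point samples from one short frame, and the function names and the 75 to 400 Hz search range are illustrative assumptions. Tempo (speaking rate) is not covered, and dedicated pitch trackers are considerably more robust than this naive version.

```python
import math
from typing import Optional, Sequence


def rms_intensity(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one frame, a common proxy for vocal intensity."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch(
    samples: Sequence[float],
    sample_rate: int,
    fmin: float = 75.0,   # assumed lower bound for speech F0
    fmax: float = 400.0,  # assumed upper bound for speech F0
) -> Optional[float]:
    """Estimate the fundamental frequency (Hz) of a voiced frame by autocorrelation.

    Scans lags whose periods fall within [fmin, fmax] and returns
    sample_rate / best_lag, or None for an empty or silent frame.
    """
    n = len(samples)
    if n == 0:
        return None
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset
    if all(v == 0.0 for v in x):
        return None
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), n - 1)
    best_lag, best_corr = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized autocorrelation: how well the frame matches itself shifted by `lag`.
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None


# Usage: a synthetic 200 Hz tone sampled at 8 kHz for 0.1 s.
frame = [math.sin(2 * math.pi * 200 * t / 8000) for t in range(800)]
print(round(estimate_pitch(frame, 8000)), round(rms_intensity(frame), 3))  # → 200 0.707
```

In a streaming setting these features would be computed per frame (for example every few tens of milliseconds) and fed to a classifier alongside other prosodic features.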
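The fusion goals above leave the strategy open. One common baseline is late (decision-level) fusion: each modality's classifier outputs a probability distribution over emotion labels, and the distributions are combined by a weighted average. The sketch below assumes that setup; the label set, modality names, and weights are hypothetical placeholders (in practice weights would be tuned on validation data), and feature-level (early) fusion is an equally valid alternative that is not shown here.

```python
from typing import Dict, Mapping

# Hypothetical label set; the project's actual emotion classes may differ.
EMOTIONS = ("angry", "happy", "neutral", "sad")


def late_fusion(
    modality_probs: Mapping[str, Mapping[str, float]],
    weights: Mapping[str, float],
) -> Dict[str, float]:
    """Weighted average of per-modality emotion distributions.

    Modalities absent from `modality_probs` (e.g. no face detected in a frame)
    simply do not contribute, and the remaining weights are renormalized.
    """
    fused = {label: 0.0 for label in EMOTIONS}
    total_weight = 0.0
    for modality, probs in modality_probs.items():
        w = weights.get(modality, 0.0)
        if w <= 0.0:
            continue  # skip unknown or zero-weighted modalities
        total_weight += w
        for label in EMOTIONS:
            fused[label] += w * probs.get(label, 0.0)
    if total_weight == 0.0:
        raise ValueError("no modality with a positive weight was provided")
    return {label: score / total_weight for label, score in fused.items()}


# Usage with made-up classifier outputs and weights.
scores = late_fusion(
    {
        "face": {"happy": 0.6, "neutral": 0.3, "sad": 0.1},
        "audio": {"happy": 0.2, "neutral": 0.5, "angry": 0.3},
        "text": {"happy": 0.7, "neutral": 0.3},
    },
    weights={"face": 0.5, "audio": 0.3, "text": 0.2},
)
print(max(scores, key=scores.get))  # → happy
```

Renormalizing over the modalities that are actually present keeps the output a valid distribution when one input (such as the camera feed) drops out.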
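For the real-time goal, raw per-frame predictions often flicker between labels, which makes immediate feedback noisy. A simple mitigation, offered here as an assumption rather than the project's documented method, is a sliding-window majority vote over the most recent frame labels. The class name and the default window of 5 frames are hypothetical.

```python
from collections import Counter, deque
from typing import Deque


class StreamingEmotionSmoother:
    """Majority vote over the most recent `window` per-frame emotion labels."""

    def __init__(self, window: int = 5) -> None:  # window size is an assumed default
        if window < 1:
            raise ValueError("window must be at least 1")
        # deque with maxlen drops the oldest label automatically.
        self._labels: Deque[str] = deque(maxlen=window)

    def update(self, label: str) -> str:
        """Add the newest frame's label and return the smoothed label."""
        self._labels.append(label)
        counts = Counter(self._labels)
        # max() returns the first maximal item, so scanning newest-first
        # breaks ties in favor of the most recent label.
        return max(reversed(self._labels), key=lambda lab: counts[lab])


# Usage: the isolated "sad" in frame 3 is suppressed; a sustained change comes through.
smoother = StreamingEmotionSmoother(window=3)
print([smoother.update(frame_label) for frame_label in ["happy", "happy", "sad", "happy", "sad", "sad"]])
# → ['happy', 'happy', 'happy', 'happy', 'sad', 'sad']
```

The window size trades stability for latency: a larger window suppresses more noise but reacts to genuine changes a few frames later.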
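Trend analysis can start from a time-ordered sequence of per-frame emotion labels. The sketch below, a minimal illustration under that assumption, collapses the sequence into episodes (runs of the same emotion), counts how often each emotion recurs as a separate episode, and tallies which emotion tends to follow which. Transition counts only hint at potential triggers; tying an episode to an actual external cause would need context data that this sketch does not model. The function names are hypothetical.

```python
from collections import Counter
from itertools import groupby
from typing import List, Tuple

Episode = Tuple[str, int]  # (emotion label, duration in frames)


def episodes(labels: List[str]) -> List[Episode]:
    """Collapse a time-ordered per-frame label sequence into runs of one emotion."""
    return [(label, sum(1 for _ in group)) for label, group in groupby(labels)]


def trend_summary(labels: List[str]) -> Tuple[List[Episode], Counter, Counter]:
    """Return the episodes, episode counts per emotion, and emotion-to-emotion transitions."""
    runs = episodes(labels)
    # How many separate episodes each emotion had (recurrence, not total duration).
    recurrences = Counter(label for label, _ in runs)
    # Which emotion directly followed which; consecutive runs always differ.
    transitions = Counter(
        (prev, nxt) for (prev, _), (nxt, _) in zip(runs, runs[1:])
    )
    return runs, recurrences, transitions


# Usage on a short synthetic label stream.
labels = ["neutral"] * 3 + ["sad"] * 2 + ["neutral"] * 4 + ["sad"]
runs, recurrences, transitions = trend_summary(labels)
print(runs)         # [('neutral', 3), ('sad', 2), ('neutral', 4), ('sad', 1)]
print(recurrences)  # Counter({'neutral': 2, 'sad': 2})
print(transitions)  # Counter({('neutral', 'sad'): 2, ('sad', 'neutral'): 1})
```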